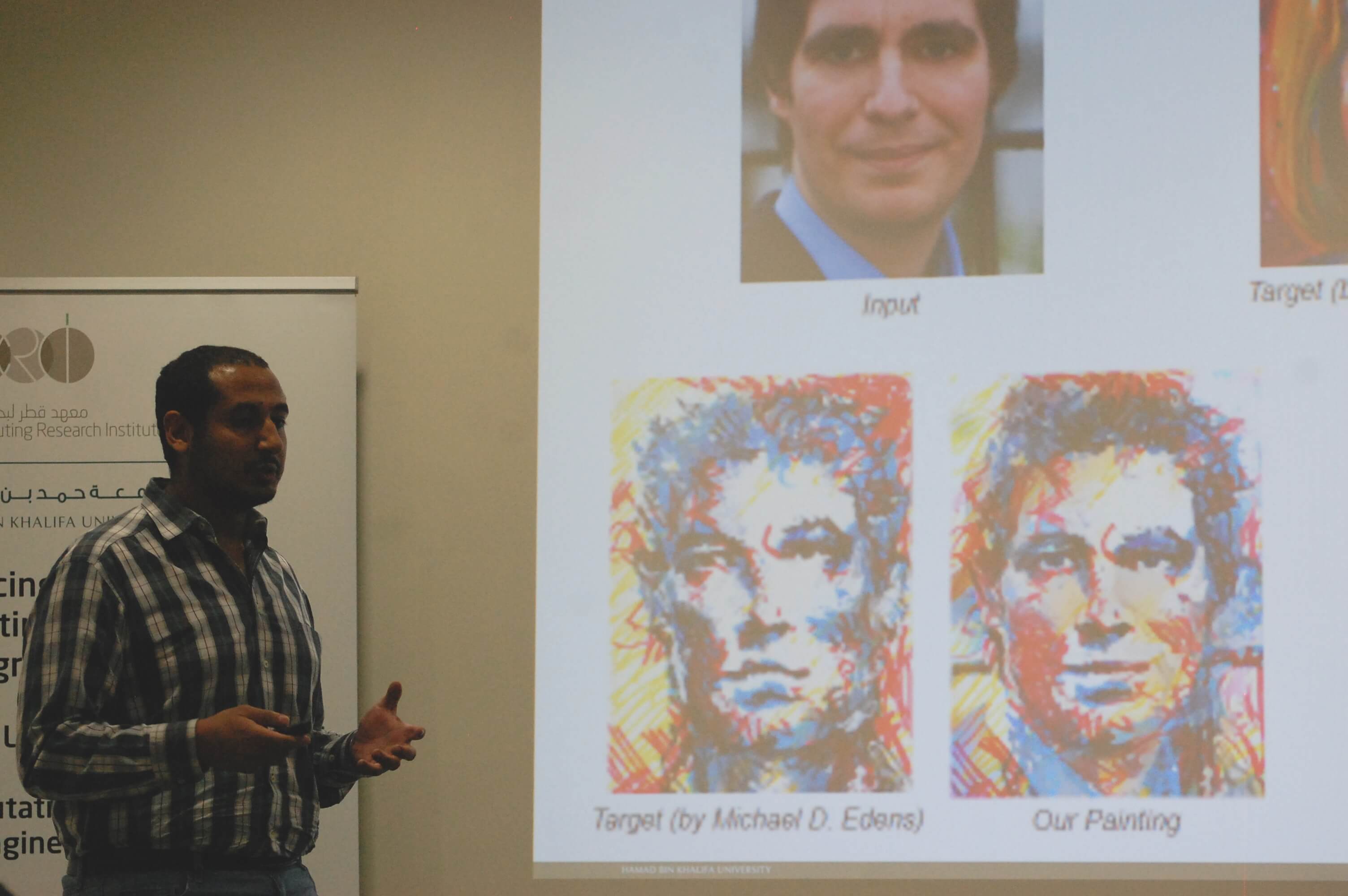

A scientist at the Qatar Computing Research Institute (QCRI), one of Hamad Bin Khalifa University's three national research institutes, has co-developed a technology that can recreate films and portraits in the painting styles of different artists.

QCRI’s Dr Mohamed Elgharib and collaborators from Trinity College Dublin have devised a technique that can render moving images in an artist’s original style.

A paper describing the technique, a form of non-photorealistic rendering, will be presented on July 24 in California at SIGGRAPH (Special Interest Group on Computer Graphics and Interactive Techniques), the world’s largest annual conference on computer graphics and interactive techniques.

The technique goes beyond current state-of-the-art methods for recreating the painting styles of artists such as Vincent Van Gogh. For example, it preserves the structure of the underlying objects in portraits. Faces are especially hard to stylize convincingly because viewers readily notice any distortion in them.

“In images like portraits, it’s very important to maintain the structure of facial features, and current approaches will corrupt them. We can more accurately capture the strokes of an original painting than other techniques,” Dr Elgharib said.

The film Loving Vincent, due for release in September, shows the kind of effect the technique aims to produce automatically. The world’s first fully painted feature-length animated film, which tells the story of Vincent Van Gogh, is being made by hand from 62,450 frames painted by 85 painters in Van Gogh’s style.

“Current approaches for generating painted movies are manual, and they are very expensive in both time and production cost. The total production budget of Loving Vincent is between 5 and 15 million euros. Our approach, however, is fully automated and much cheaper. We just need some servers and we are ready to go,” Dr Elgharib said.

The technology also lends itself to selfies, Facebook profile pictures and portraits.

Dr Elgharib and other QCRI scientists are now exploring how the same techniques could be used to solve other problems in analyzing virtual reality content.